When new technologies arrive, we often meet them with caution. That instinct has served us well in education, fending off many (not all) of the educational fads and technological gimmicks that periodically come our way. Beyond education, every major shift in how we record, communicate, or process ideas usually brings anxiety about change and what might be lost.

However, despite Socrates’s fears that writing would weaken memory, or many teachers’ worries that calculators would make their students stupid, science, progress and development have continued to thrive. In fact, technology adoption correlates strongly with outcomes ranging from higher literacy and longer life expectancy to lower infant mortality and a faster pace of scientific discovery.

Today similar questions arise about artificial intelligence. If AI can plan, summarise, or even write, does that risk weakening our ability to think for ourselves?

It’s a reasonable question. But my experience suggests that, used thoughtfully, AI can do the opposite. It can help us think better by making our reasoning more visible, by prompting reflection, and by challenging what we take for granted. In that sense, AI can act as a thinking buddy, not a replacement for thought.

Thinking With, Not Instead Of

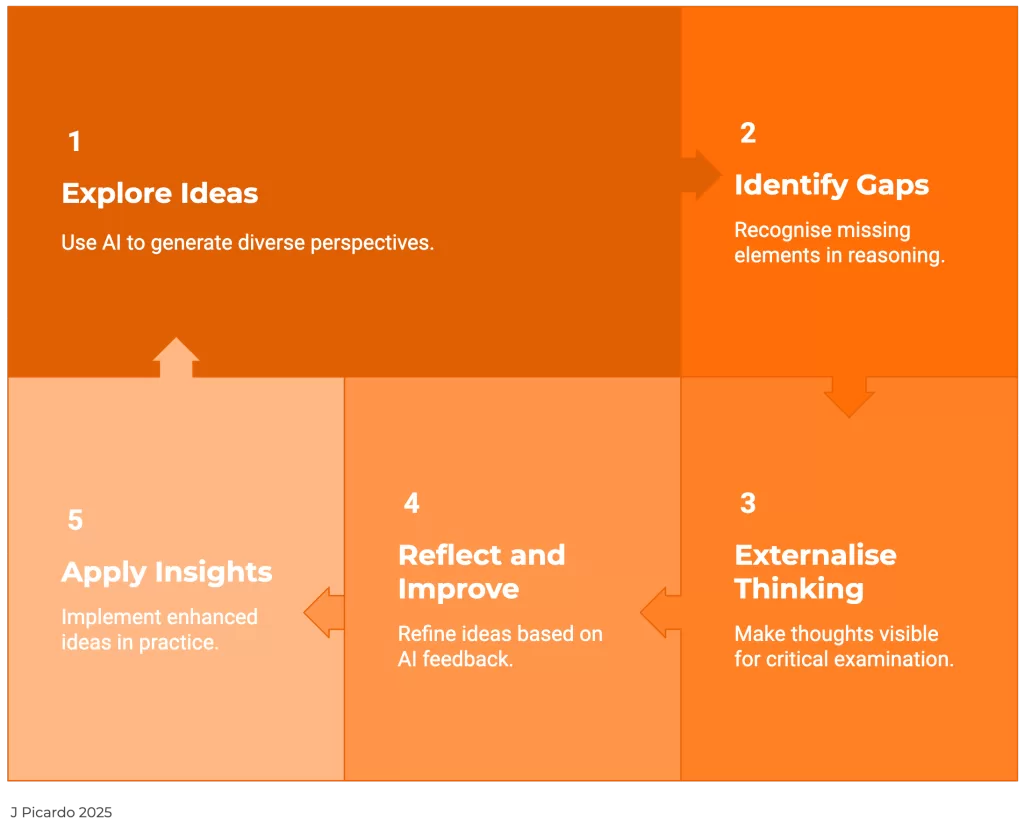

When I use AI to explore an idea or draft a paragraph, I’m not outsourcing my reasoning; I’m interrogating it. Asking a model to offer an alternative view or summarise my argument helps me notice gaps or assumptions I hadn’t seen. It externalises my thinking so I can examine it more critically. As someone who is not able to turn to a colleague and ask for their perspective, this has proved invaluable to me.

There’s no reason why this can’t be useful for teachers and leaders too. AI can act as a reflective partner in lesson planning, curriculum design, or school improvement. It offers another way to ask, “What am I missing?” or “What would this look like from another angle?”

For students, it can model metacognition. Rather than using AI to produce answers, we can use it to question them, and to critique, compare, and improve reasoning. In doing so, students learn that thinking is a process, not a product.

Why the Fears Persist

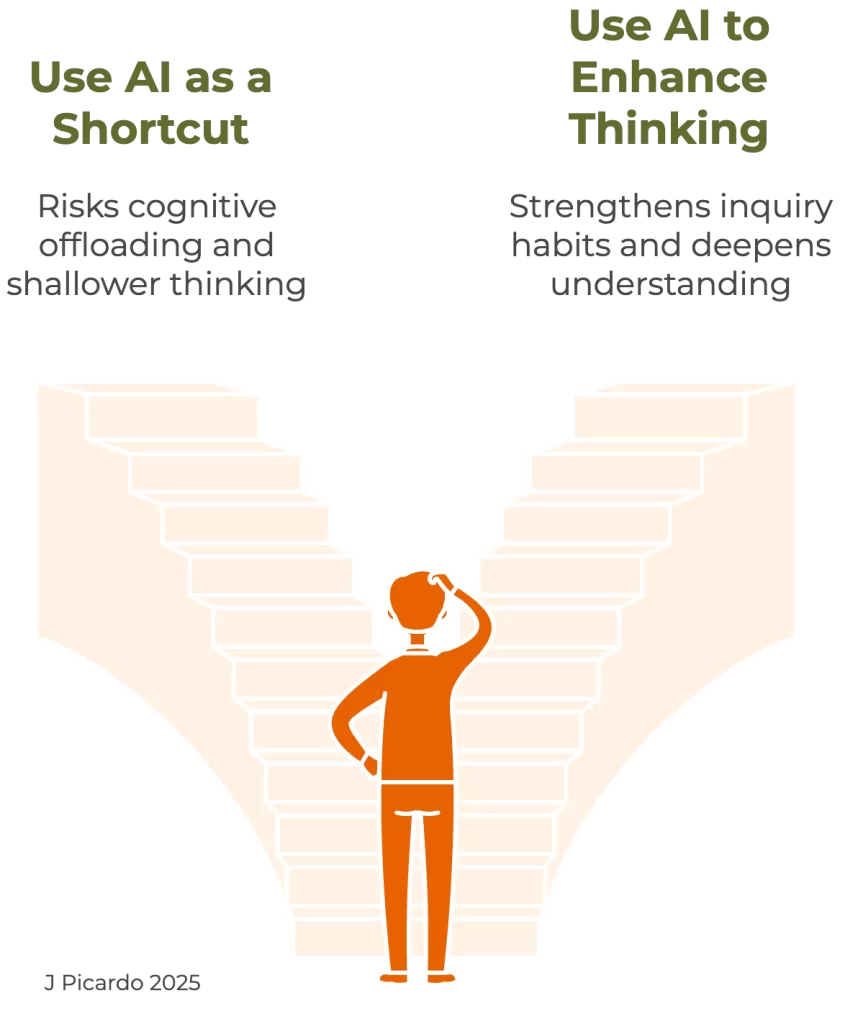

Concerns about AI are not unfounded. Psychologists warn of cognitive offloading: when we rely too heavily on tools, we risk losing the underlying skill. That’s why it matters to distinguish between delegating a task and delegating the act of thinking itself.

If we treat AI as a shortcut, it will make us shallower thinkers. But if we use it to examine our thinking, whether by testing ideas, checking reasoning, or exploring alternatives, it can strengthen the habits of inquiry that lie at the heart of good learning.

So the key question is not whether AI changes thinking. It will. The question is whether we choose to use it to deepen or dilute our thinking.

Human Agency and Accountability

Technology has a tendency to amplify intention. To return to the calculator analogy, a calculator can be a shortcut or a learning aid; it depends on how it’s used. The same is true of AI. It reflects our curiosity or our lack of interest. To paraphrase Henry Ford, whether you think AI will help you think more clearly or make you dumber, you’re probably right.

Of course, we should also be realistic: tools shape behaviour as well as reflect it. The algorithms behind AI systems are often designed to maximise engagement and efficiency, and those incentives don’t always align with educational values like patience, depth, and care.

That’s why human agency matters. Users remain accountable for the thinking and ethical judgements that AI cannot make.

Equity, Ethics, and Perspective

Technology is never neutral, and AI is no exception. Its responses reflect the data and assumptions on which it was trained, often privileging dominant cultural and linguistic perspectives.

Questions of bias, privacy, ownership, and access all matter. Confidence, cost, and connectivity still shape who can use these tools well. Professional learning, transparency, and inclusivity will be essential if AI is to support thinking rather than entrench inequality.

These issues warrant deeper exploration elsewhere. Here, the focus remains on whether AI can help us think more clearly and critically when used with awareness and intent.

Conditions for Thoughtful Use

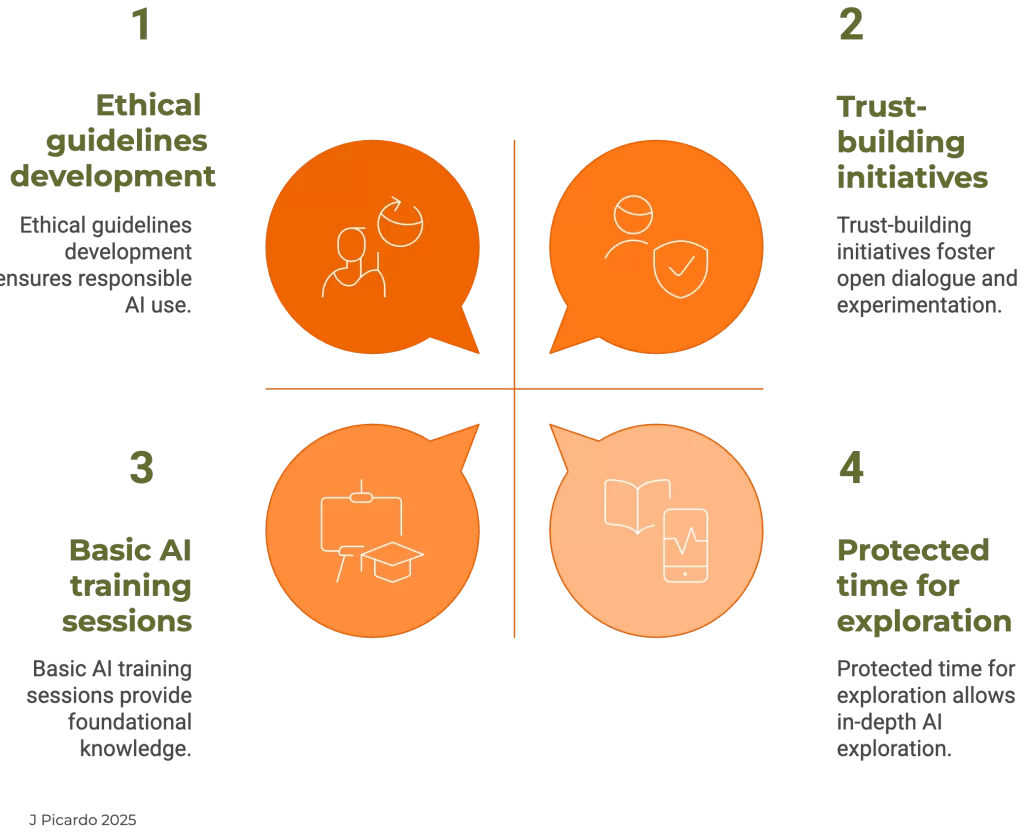

For AI to act as a thinking partner rather than a thinking substitute, schools need deliberate conditions that make reflective experimentation possible. These aren’t new ideas. They’re the same foundations that underpin good professional learning.

- Time: Teachers and leaders need protected space to explore AI without fear of judgment or performance pressure. Reflection takes time; understanding how AI can genuinely enhance thinking requires curiosity, iteration, and the freedom to make mistakes.

- Trust: A culture of psychological safety allows staff to discuss uncertainty and share insights openly. When leaders model inquiry instead of certainty, it signals that experimentation is valued over perfection.

- Training: Professional development should focus not just on how AI works, but on why and when to use it. The goal is not competence alone but pedagogical discernment: knowing when technology supports learning and when it distracts from it.

- Transparency: Clear principles for ethical and responsible use are vital, particularly around assessment, authorship, and data handling. Openness builds confidence and helps everyone navigate boundaries with integrity.

These conditions don’t only support thoughtful AI adoption; they strengthen the habits of reflection, dialogue, and inquiry that already define effective teaching and leadership.

A Realistic Balance

AI is neither the end of independent thought nor a magical shortcut to insight. It’s a mirror. It reflects back what we bring to it: our knowledge, our biases, our curiosity, or our lack thereof.

For me, its greatest value lies in the way it makes my thinking visible. It lets me test an idea before I commit to it, or see it from an angle I might have missed. In that sense, AI can reinforce precisely what good education has always aimed for: clarity of thought, critical questioning, and reflective practice.

If used responsibly (and I recognise the heavy lifting that “if” is doing here), AI will not replace our capacity for thought, but it will hold a mirror up to it. The challenge then is not to think less, but to think better: to bring discernment, curiosity, humanity, and conscience to the partnership.

“We are stuck with technology when what we really want is just stuff that works.”

— Douglas Adams

Photo by Karola G